

Product Experimentation Framework for Mobile Product Teams

PUBLISHED

1 January, 2024

Product Analytics Expert

Pretty much every product team will be familiar with product experimentation. A/B testing, feature testing, user segmentation—these are well-known concepts in the world of product development.

But what about the processes surrounding these product experiments? Is your team able to rely on a framework or guideline to ensure that experiments are conducted properly? If not, you might be leaving valuable insights on the table. Or, worse yet, introducing bias into your results.

In this guide, we’ll discuss the need for a product experimentation framework and explain how a product analytics tool like UXCam can help you build one.

Let's get started.

What is a product experimentation framework?

Not familiar with product experimentation in general? We recommend starting with our introductory guide—we'll wait here!

A product experimentation framework is a well-documented set of steps that product teams can use to systematize their experimentation process. It should provide clear guidance on building and testing a hypothesis, analyzing and interpreting results, and communicating those results to the rest of the organization.

Different types of product experiments

A/B testing

A/B testing (also known as split testing) is one of the most common product experiments. It involves randomly splitting users into two groups, showing each group a slightly different version of a screen, feature, or app, and comparing how the two groups behave.
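The testing tool normally handles the split for you, but it helps to know what a fair split looks like: each user is assigned effectively at random and keeps seeing the same version every time. Below is a minimal sketch of one common approach, assuming every user has a stable ID. `assign_variant` and the experiment key are hypothetical names for illustration, not part of Firebase or UXCam.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: tuple[str, ...] = ("A", "B")) -> str:
    """Map a user to one variant, the same one every time they open the app."""
    # Hash the experiment name together with the user ID so each experiment splits users independently
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the hash as a large integer and take it modulo the number of variants
    return variants[int(digest, 16) % len(variants)]


print(assign_variant("user-123", "checkout_button_size"))
```

Because the result depends only on the user ID and experiment name, a user's experience stays consistent across sessions. Including the experiment name in the hash means a user who lands in group A for one test isn't automatically in group A for every other test.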

To run A/B tests, you'll need a dedicated testing tool like Firebase paired with an analytics tool like UXCam to compare key metrics (e.g., conversion rate or time on page) across both versions.
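Comparing the two versions usually comes down to one question: is the gap in a metric bigger than random noise would produce? For a yes/no metric like conversion, a two-proportion z-test is one standard check. The sketch below uses only Python's standard library and assumes you've exported the number of users and conversions for each variant; the counts in the example are made up, and the code isn't meant to reproduce the statistics any particular tool reports.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare the conversion rates of variants A and B.

    Returns (z, p_value), where p_value is two-sided: the probability of seeing
    a gap at least this large if both versions truly converted equally.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Every user converted, or none did: there is no difference to detect
        return 0.0, 1.0
    z = (rate_b - rate_a) / se
    # erfc(|z| / sqrt(2)) equals the two-sided tail area of the standard normal
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 4,000 users per variant
z, p = two_proportion_z_test(conv_a=200, n_a=4000, conv_b=260, n_b=4000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these numbers, B's 6.5% conversion rate beats A's 5% by a margin that would be unlikely (p below 0.01) if the two versions performed the same.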

Multivariate testing

Multivariate testing uses the same basic premise as A/B testing but changes several elements at once and tests combinations of them. For example, while an A/B test might compare the effectiveness of two different button colors, a multivariate test might simultaneously vary button placement, font size, and images to find out which combination performs best.
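In a full-factorial multivariate test, every combination of the options being tested becomes its own variant, which is how the test can reveal whether elements work better together than alone. The sketch below enumerates those combinations; the element names and options are hypothetical, loosely based on the button example above.

```python
from itertools import product

# Hypothetical elements on a checkout screen, each with two options to test
factors = {
    "button_placement": ["bottom", "inline"],
    "font_size": ["14pt", "16pt"],
    "image": ["product", "lifestyle"],
}


def full_factorial(factors: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return one variant (a dict of element -> option) per combination of options."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*factors.values())]


variants = full_factorial(factors)
print(len(variants))  # 8 (2 x 2 x 2)
print(variants[0])    # {'button_placement': 'bottom', 'font_size': '14pt', 'image': 'product'}
```

The number of variants multiplies with every element and option you add, and each variant needs its own share of traffic, so multivariate tests generally need far more users than a simple A/B test to reach a reliable result.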

Funnel testing

Funnel testing is a more specific type of product experiment that focuses on the steps users take toward a goal, such as completing a purchase.

It’s designed to identify friction points in that journey that lead to drop-offs (e.g., abandoned carts or churn). With a tool like UXCam, you can run experiments on funnel stages to figure out what works (and what doesn't).
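Finding the friction point starts with the step-to-step conversion rate: of the users who reached one stage, what share reached the next? Here's a minimal sketch, assuming you can export how many users reached each funnel stage. The stage names and counts are hypothetical.

```python
def step_conversion(funnel: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Share of users at each stage who continued to the next stage."""
    rates = []
    for (stage, users), (next_stage, next_users) in zip(funnel, funnel[1:]):
        rate = next_users / users if users else 0.0
        rates.append((f"{stage} -> {next_stage}", rate))
    return rates


def biggest_drop_off(funnel: list[tuple[str, int]]) -> tuple[str, float]:
    """The transition with the lowest conversion, i.e. the largest relative drop-off."""
    return min(step_conversion(funnel), key=lambda step: step[1])


# Hypothetical counts of users reaching each checkout stage
checkout_funnel = [
    ("view_cart", 10_000),
    ("start_checkout", 6_000),
    ("enter_payment", 4_800),
    ("purchase", 2_400),
]

for transition, rate in step_conversion(checkout_funnel):
    print(f"{transition}: {rate:.0%}")
print("Biggest drop-off:", biggest_drop_off(checkout_funnel))
```

In this made-up funnel, the payment step loses half of the users who reach it, so that's the stage to investigate and experiment on first.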

How to implement a product experimentation framework

1. Define a process for identifying meaningful issues

In an ideal world, we'd experiment with every potential product issue. Sadly, we live in a world limited by workloads, budgets, and time constraints. That means you need a process for figuring out which problems are meaningful.

Here are three vectors you can use to define meaningful problems:

Likelihood: Common issues are usually more important than rare ones. Look for repeat offenders in reviews, tickets, bug reports, and testing data.

Impact: High-impact issues can massively affect ROI. Which issues lead to decreased revenue, user retention, or engagement?

Effort: Sometimes a quick win is the way to go. What requires the least amount of effort to test and implement?

From these three vectors, you can make two matrices that come in handy when prioritizing issues:

Likelihood and Impact

If you have the funds to pursue labor- or resource-intensive initiatives, you should look for high-impact, high-likelihood issues.

Effort and Impact

If you're running a leaner operation, you'll want to tackle high-impact, low-effort issues first.
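Once each issue has a rough score, the two matrices become simple filters. The sketch below assumes a hypothetical 1-to-5 scale for likelihood, impact, and effort, and treats 4 or above as "high" and 2 or below as "low". Those cutoffs and the sample issues are illustrative, not a standard.

```python
from dataclasses import dataclass

HIGH = 4  # hypothetical cutoff: scores of 4-5 count as "high"
LOW = 2   # hypothetical cutoff: scores of 1-2 count as "low"


@dataclass
class Issue:
    name: str
    likelihood: int  # 1 = rare, 5 = very common
    impact: int      # 1 = minor, 5 = severe
    effort: int      # 1 = quick fix, 5 = major project


def by_impact(issues: list[Issue]) -> list[str]:
    """Names of the given issues, highest impact first."""
    return [i.name for i in sorted(issues, key=lambda i: i.impact, reverse=True)]


def likelihood_impact_picks(issues: list[Issue]) -> list[str]:
    """Likelihood x impact matrix: high-likelihood, high-impact issues."""
    return by_impact([i for i in issues if i.likelihood >= HIGH and i.impact >= HIGH])


def effort_impact_picks(issues: list[Issue]) -> list[str]:
    """Effort x impact matrix: high-impact, low-effort quick wins."""
    return by_impact([i for i in issues if i.impact >= HIGH and i.effort <= LOW])


backlog = [
    Issue("Rage taps on checkout button", likelihood=5, impact=5, effort=1),
    Issue("Crash on older Android devices", likelihood=2, impact=5, effort=4),
    Issue("Slow search results", likelihood=4, impact=4, effort=5),
    Issue("Typo on settings screen", likelihood=5, impact=1, effort=1),
]

print(likelihood_impact_picks(backlog))
print(effort_impact_picks(backlog))
```

The well-funded view keeps the slow search results even though fixing them is a big job, while the lean view keeps only the quick win.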

2. Create a process for coming up with hypotheses

Once you've identified an issue, it's time to build a hypothesis.

A good hypothesis will clearly identify what you expect to happen (and why) when the product is changed in a specific way. It should also name the metric you'll use to measure success (e.g., conversion rate or time on page).
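One way to make sure every hypothesis covers the change, the expected outcome, the reasoning, and the success metric is to record it in a fixed template. The `Hypothesis` class below is a hypothetical structure, not a feature of any tool; the example values borrow the checkout-button case discussed later in this guide.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    change: str   # the specific product change
    outcome: str  # what you expect to happen
    reason: str   # why you expect it (the evidence behind the idea)
    metric: str   # the metric that will measure success

    def statement(self) -> str:
        return (
            f"If we {self.change}, then {self.outcome}, "
            f"because {self.reason}. Success metric: {self.metric}."
        )


checkout = Hypothesis(
    change="increase the size of the checkout button",
    outcome="rage taps will fall and conversions will rise",
    reason="rage taps on the button often precede abandoned carts",
    metric="checkout conversion rate",
)
print(checkout.statement())
```

Keeping the fields separate makes it easy to spot a hypothesis with no evidence behind it or no metric attached before the experiment starts.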

Here's a simple process for hypothesizing:

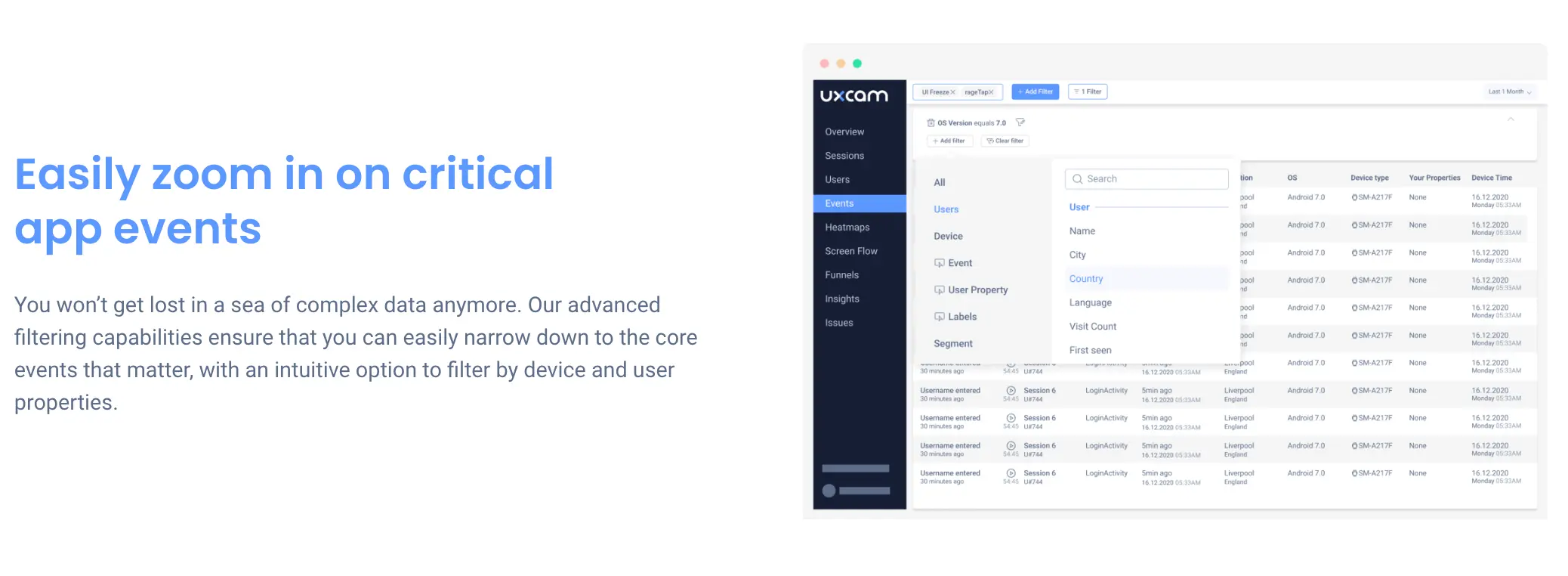

Isolate problem users

The first step is to use your product analytics tool to segment users who exhibit or fall victim to the issue you're investigating.

With UXCam, you can slice and dice your data using any number of filters (e.g., geographic location or device type). You can even create fully custom tags for segmenting and tracking user behavior.
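Under the hood, segmenting is a filter over session records. The sketch below shows the idea on a hypothetical exported dataset; the `Session` fields, the `segment` helper, and the tag names are assumptions for illustration and are not UXCam's API.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    user_id: str
    country: str
    device: str
    tags: set[str] = field(default_factory=set)  # hypothetical custom tags, e.g. "checkout_error"


def matches(session: Session, filters: dict[str, str]) -> bool:
    """True if the session satisfies every filter; the key "tag" checks custom tags."""
    for key, value in filters.items():
        if key == "tag":
            if value not in session.tags:
                return False
        elif getattr(session, key) != value:
            return False
    return True


def segment(sessions: list[Session], **filters: str) -> list[Session]:
    """Sessions matching all filters, e.g. segment(sessions, device="Android")."""
    return [s for s in sessions if matches(s, filters)]


sessions = [
    Session("u1", "US", "Android", {"checkout_error"}),
    Session("u2", "US", "iOS", {"checkout_error"}),
    Session("u3", "DE", "Android"),
    Session("u4", "DE", "Android", {"checkout_error"}),
]

problem_users = segment(sessions, device="Android", tag="checkout_error")
print([s.user_id for s in problem_users])  # ['u1', 'u4']
```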

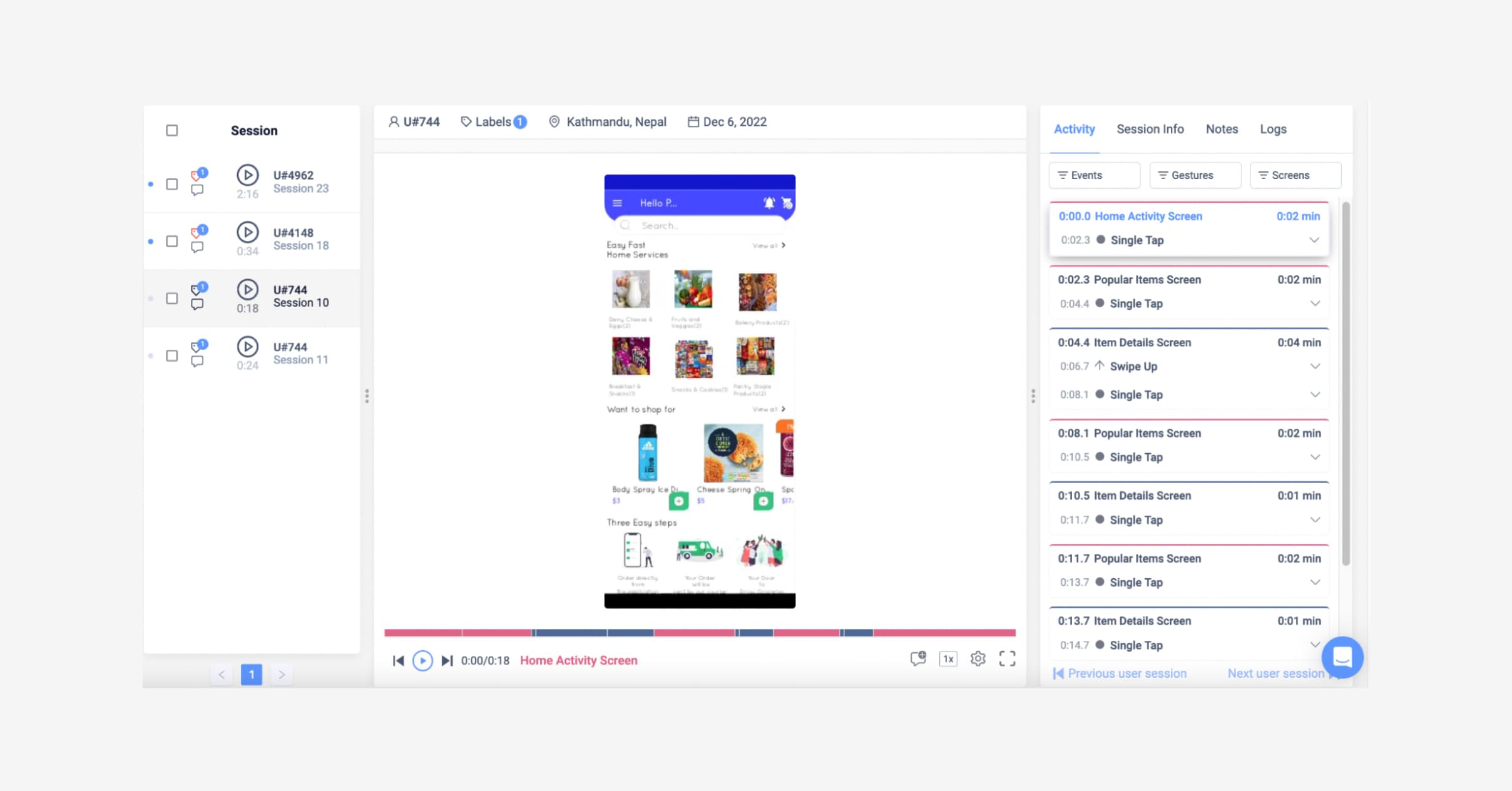

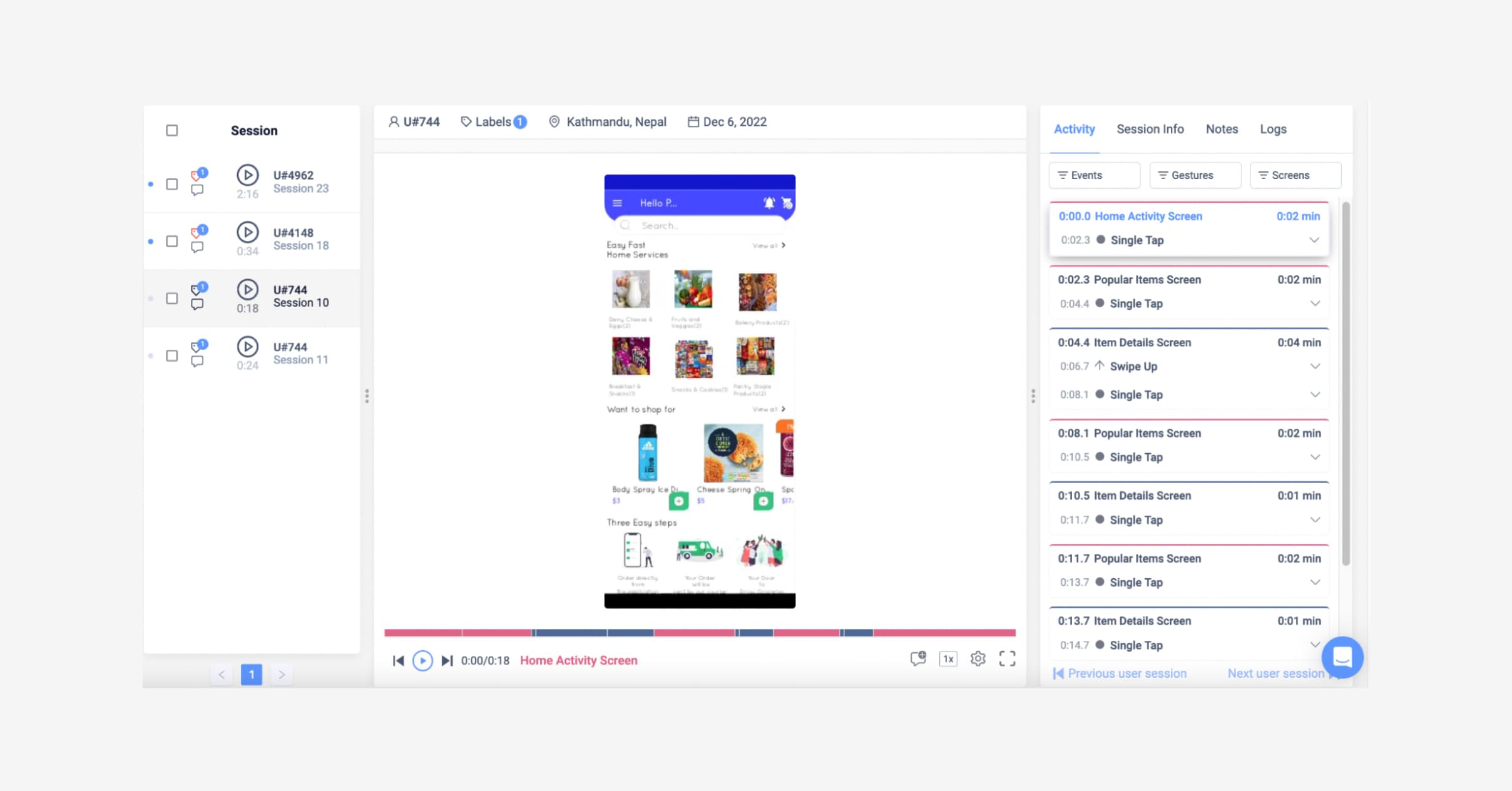

Zoom in on individual cases

Once you have a dataset, it's time to zoom in on individual users.

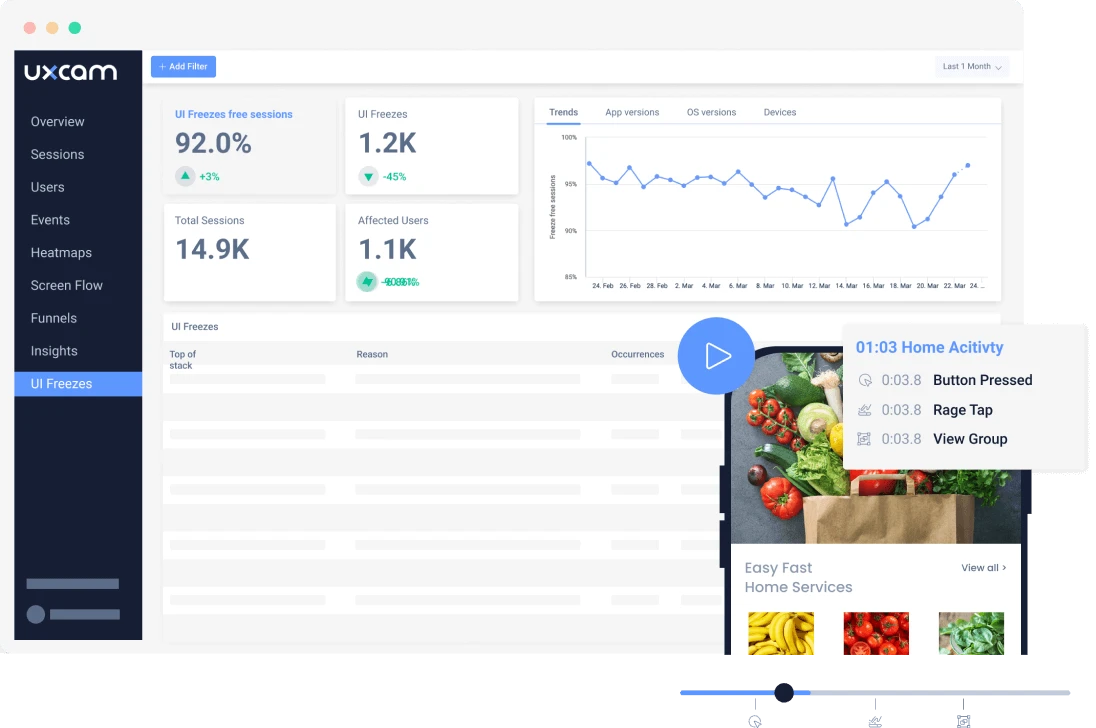

UXCam's Session Replay feature lets you go back in time and watch a user's entire session in full context, while Heatmaps show where users interact on each screen. Together, they give you valuable insight into why the issue is occurring and how it can be addressed.

Look for patterns

Once you've checked out a number of sessions, patterns might start emerging.

To give a simple example, you might notice that a number of your abandoned carts are preceded by rage taps on the checkout button. Patterns like these are what you'll use to form a hypothesis—“Increasing the size of the checkout button will reduce the number of rage taps and increase conversions.”
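Checking a pattern like this across many sessions, rather than a handful, helps confirm it's real before you build a hypothesis on it. It's also worth comparing against sessions that didn't end in abandonment: a pattern only points to a cause if it's more common among the problem users. The sketch below assumes tap events can be exported with an element name and a timestamp, and it uses a hypothetical definition of a rage tap (three or more taps on the same element within one second). Analytics tools that flag rage taps may use different rules.

```python
from dataclasses import dataclass


@dataclass
class Tap:
    element: str  # identifier of the tapped UI element
    t_ms: int     # milliseconds since session start


def has_rage_tap(taps: list[Tap], element: str, min_taps: int = 3, window_ms: int = 1000) -> bool:
    """True if `element` got at least `min_taps` taps within any `window_ms` span."""
    times = sorted(t.t_ms for t in taps if t.element == element)
    # Check every run of min_taps consecutive taps; if the run fits in the window, it's a rage tap
    for i in range(len(times) - min_taps + 1):
        if times[i + min_taps - 1] - times[i] <= window_ms:
            return True
    return False


def rage_tap_rates(sessions: list[dict], element: str = "checkout_button") -> tuple[float, float]:
    """Share of abandoned vs. completed sessions containing a rage tap on `element`."""
    def share(group: list[dict]) -> float:
        if not group:
            return 0.0
        return sum(has_rage_tap(s["taps"], element) for s in group) / len(group)

    abandoned = [s for s in sessions if s["abandoned_cart"]]
    completed = [s for s in sessions if not s["abandoned_cart"]]
    return share(abandoned), share(completed)


# Hypothetical sessions: two abandoned carts, two completed purchases
sessions = [
    {"abandoned_cart": True, "taps": [Tap("checkout_button", t) for t in (100, 300, 500)]},
    {"abandoned_cart": True, "taps": [Tap("checkout_button", t) for t in (100, 2000, 4000)]},
    {"abandoned_cart": False, "taps": [Tap("checkout_button", 100)]},
    {"abandoned_cart": False, "taps": [Tap("add_to_cart", 50), Tap("checkout_button", 900)]},
]

print(rage_tap_rates(sessions))  # (0.5, 0.0)
```

A much higher rate among abandoned carts than among completed purchases is the kind of signal that justifies the checkout-button hypothesis; similar rates in both groups would suggest looking elsewhere.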

3. Monitor metrics & analyze results

Once you've formulated your hypothesis, it's time to run an experiment, possibly one of the types we covered at the beginning of this guide!

This involves making a change to the product and then monitoring the metrics associated with it. Analytics tools like UXCam provide real-time data, so you can keep an eye on the numbers as they roll in.

Not sure what to track? Check out our definitive guide to product metrics and KPIs.

Once you've collected enough data to tell a real effect apart from random noise, you can analyze the results and make an informed decision. If the experiment was a success, great! Roll out the change to all users. If it failed, no problem: you've still learned something valuable about your product.
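How much data is "enough" can be estimated before the experiment starts. For a conversion-rate test, a standard approximation gives the number of users each variant needs to detect a given lift at a chosen significance level and statistical power. Fixing that number up front, rather than stopping the moment the live numbers look good, also protects against false positives from checking results repeatedly. Here's a minimal sketch using Python's standard library; the 5% baseline and 6% target are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, target: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in EACH variant to detect a change from `baseline` to `target`
    conversion with a two-sided test at significance `alpha` and the given power."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power = 0.8
    p_bar = (baseline + target) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline * (1 - baseline) + target * (1 - target))
    ) ** 2
    return math.ceil(numerator / (baseline - target) ** 2)


print(sample_size_per_variant(0.05, 0.06))
```

For a lift from 5% to 6%, this comes out to a little over 8,000 users per variant. Smaller expected lifts push the number up quickly, because the required sample grows roughly with the inverse square of the difference.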

Rinse and repeat!

Get more from your data with UXCam

Product experimentation works best when the processes and tools used are standardized across an organization. By following these three steps—identifying meaningful issues, building hypotheses, and monitoring metrics—you'll build a cycle of experimentation that will yield powerful results.

Want to boost your product experimentation framework?

Check out UXCam's suite of product analytics tools today. We make it easy to find meaningful issues and build powerful hypotheses that you can test quickly and easily. Get started with a 14-day free trial.

AUTHOR

Tope Longe

Product Analytics Expert

Ardent technophile exploring the world of mobile app product management at UXCam.
