How to do Website Usability Testing

PUBLISHED

29 January, 2025

Product Analytics Expert

If users struggle to navigate your web app, they won’t stick around. Website usability testing helps you spot these roadblocks before they hurt engagement, conversions, or retention.

As a Product Manager or UX Designer, you need a clear understanding of how real users interact with your product. Usability testing reveals where they get stuck, confused, or frustrated—so you can fix issues before they impact business outcomes.

In this guide, you’ll learn the key steps to running usability tests, best practices to follow, and how to analyze results. Let’s dive into making your web app user experience as seamless as possible.

Website usability testing checklist

| Steps | Description |
|---|---|
| Define test objectives | Set clear usability goals and measurable success criteria. |
| Recruit the right participants | Recruit participants who match target users in experience and device preferences. |
| Choose the testing environment | Select moderated/unmoderated and remote/in-person testing based on research needs. |
| Create realistic tasks & scenarios | Design real-world tasks that reflect user workflows and pain points. |
| Establish key usability metrics | Track task success, time on task, errors, navigation patterns, and drop-offs. |
| Guide participants without bias | Avoid leading questions, unintentional cues, and influence on participant decisions. |
| Observe & record user behavior | Monitor hesitation, backtracking, misclicks, and frustration for usability issues. |
| Collect post-test feedback | Ask open-ended questions to uncover user expectations and pain points. |
| Analyze findings & prioritize fixes | Identify recurring issues, compare metrics with feedback, and prioritize fixes. |
| Implement changes & retest | Validate changes through follow-up tests and track real-world impact. |

What is website usability testing?

Website usability testing is the process of evaluating how easily users can navigate and interact with your web app. It helps you understand whether your product is intuitive, efficient, and aligned with user expectations.

The goal is simple: make sure your website or web product is easy to use. If users struggle to complete key tasks—like signing up, finding information, or making a purchase—you’re losing engagement and potential revenue. Usability testing helps you fix these friction points before they become major issues.

Why does website usability testing matter?

Your web app might look great, but if users struggle to complete tasks, they won’t stick around. Website usability testing helps you identify and fix usability issues before they drive users away. A seamless experience keeps people engaged, reduces churn, and builds long-term loyalty. Let's look at some key benefits of usability testing:

Reduce churn by eliminating friction

Every unnecessary click, confusing menu, or unclear CTA increases the risk of users abandoning your web app. Friction leads to frustration, and frustration leads to churn.

Usability testing reveals where users get stuck, helping you refine navigation, optimize page layouts, and ensure workflows are intuitive. The fewer obstacles users encounter, the more likely they are to complete actions—whether it’s signing up, purchasing, or engaging with your product.

Build trust and credibility

A poorly designed experience makes users question your product’s reliability. If they struggle to use your app, they won’t hesitate to look for alternatives.

A well-optimized web app creates a sense of trust. When users can complete tasks effortlessly, they feel more confident in your product and are more likely to return. Usability testing ensures your design meets expectations, reinforcing credibility and brand loyalty.

Make data-driven design decisions

Relying on assumptions about user behavior can lead to poor design choices. Usability testing provides real-world insights, showing how users interact with your app in practical scenarios.

By analyzing usability test data, you can validate which design elements work and which need improvement. Instead of making decisions based on internal preferences, you get user-centered, data-backed insights that drive measurable improvements.

Enable continuous product improvement

A web app is never truly “finished.” User expectations change, new features are added, and competitors raise the bar. Usability testing helps you stay ahead by making iterative improvements based on actual user behavior.

By regularly testing and refining, you ensure your web app evolves with user needs, rather than falling behind. A seamless experience today doesn’t guarantee success tomorrow—ongoing usability testing keeps your product competitive.

Enhance accessibility to reach a wider audience

A web app that’s only usable for a subset of users is limiting its growth potential. If people with disabilities or diverse browsing preferences struggle to navigate your product, you’re missing out on a huge market segment and potentially violating accessibility standards.

Usability testing helps uncover accessibility gaps that might otherwise go unnoticed. It ensures users with screen readers, motor impairments, or color vision deficiencies can interact with your site effectively.

Next, we’ll break down the essential components of an effective usability test, so you can start improving your web app’s user experience with confidence.

How to conduct a website usability test (step by step)

Running a website usability test requires a structured, strategic approach to ensure you capture meaningful insights. Whether you’re evaluating a prototype, refining a newly launched feature, or optimizing an existing web app, following these steps will help you pinpoint usability issues, improve user experience, and boost engagement.

Each step in this process ensures that usability testing delivers actionable data—not just surface-level observations. Let’s break it down.

Step 1: Define your test objectives

Before running a website usability test, you need to clearly define what you’re testing and why. Without a focused objective, your findings may be too broad or lack actionable insights, leading to ineffective improvements.

Your usability test should aim to answer specific, measurable questions about your web app’s user experience, such as:

Navigation & information architecture: Do users understand the menu structure and can they find key pages without confusion?

Task completion & workflow efficiency: Can users complete critical tasks, such as signing up, upgrading a subscription, or completing a purchase, without unnecessary friction?

Clarity & comprehension: Are call-to-action buttons, form labels, and error messages clear and intuitive?

Conversion funnel optimization: Where do users drop off in the sign-up, checkout, or onboarding flow? What’s causing abandonment?

By focusing on one or more of these areas, you ensure that your usability test provides meaningful insights that lead to direct improvements.

How to set measurable usability goals

A strong usability test objective is not just a broad question—it should include specific success criteria that help you measure whether an issue exists and how severe it is.

Example of a weak usability objective: "Check if users like the new checkout process."

Example of a strong usability objective: "Measure if users can complete the checkout process in under 2 minutes without needing to retry form fields."

By defining specific usability success benchmarks (e.g., time on task, error rates, or conversion rates), you ensure the test delivers actionable, data-backed insights rather than vague feedback.

Without clear usability test objectives, you risk wasting time analyzing unstructured feedback that doesn’t contribute to real improvements. Defining precise goals from the start allows you to focus on fixing what actually impacts user experience, engagement, and conversions.
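One way to make an objective like the strong example above testable is to write its success criteria down as an explicit check and run every session through it. The sketch below is only an illustration: the `TaskResult` fields and the `meets_objective` helper are hypothetical names, and the 120-second and zero-retry thresholds are taken from the example objective rather than from any standard.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """One participant's attempt at the checkout task (hypothetical schema)."""
    participant: str
    completed: bool
    seconds: float
    field_retries: int


def meets_objective(result: TaskResult, max_seconds: float = 120.0, max_retries: int = 0) -> bool:
    """True if checkout was completed in under max_seconds with no more than max_retries form-field retries."""
    return (
        result.completed
        and result.seconds < max_seconds
        and result.field_retries <= max_retries
    )


# Made-up sessions for illustration only.
results = [
    TaskResult("P1", True, 95.0, 0),
    TaskResult("P2", True, 140.0, 0),   # completed, but too slow
    TaskResult("P3", True, 110.0, 2),   # completed, but retried form fields
    TaskResult("P4", False, 180.0, 1),  # abandoned the task
]

passed = [r.participant for r in results if meets_objective(r)]
print(f"{len(passed)}/{len(results)} participants met the objective: {passed}")
```

Writing the criteria this way forces you to decide up front what counts as success, which keeps the later analysis from drifting toward whatever the data happens to show.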

Step 2: Recruit the right participants

The quality of your usability test results depends entirely on who participates. If you’re testing with users who don’t match your actual audience, their feedback may be irrelevant—or worse, misleading.

Who should you recruit?

To get insights that accurately reflect real-world user behavior, choose participants who represent your target user base. Consider the following factors:

User personas: Align participants with your ideal customer profiles, whether they are first-time users, power users, enterprise clients, or eCommerce shoppers.

Experience level: If you’re testing onboarding flows, focus on new users. If you’re refining advanced features, test with experienced users.

Device & browser diversity: Ensure your usability test includes users across desktop, mobile, tablet, and various browsers to capture different experiences.

For example, if you’re testing an eCommerce checkout flow, the ideal participants would be:

Users who frequently shop online and are familiar with standard checkout processes.

A mix of mobile and desktop users to ensure the experience works well across devices.

People from your target regions, especially if localization or language differences affect usability.

If your web app is designed for B2B teams, then testing with casual consumers won’t yield relevant insights. Instead, focus on product managers, IT admins, or UX designers—whoever matches your real user base.

How many participants do you need?

The right sample size depends on your testing method—for qualitative insights, fewer participants are enough, but for measuring usability metrics, larger groups provide more reliable data.

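For basic usability insights: 5–8 participants can uncover most major usability flaws. Nielsen Norman Group's widely cited guidance is that testing with about five users surfaces the majority of problems.

For deeper quantitative analysis: 20–30 participants give more stable estimates of metrics such as task success rate and time on task, although the exact number you need depends on how much behavior varies between users.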
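The small-sample guidance is usually explained with a simple model associated with Jakob Nielsen and Tom Landauer: if each participant independently has probability `p` of running into a given problem, then `n` participants are expected to surface a share of `1 - (1 - p)^n` of the problems. The sketch below assumes the often-quoted average of `p = 0.31`; that value is an assumption here, and the real figure for your product and tasks may be quite different.

```python
def share_of_problems_found(n_participants: int, p_detect: float = 0.31) -> float:
    """Expected share of usability problems found under the 1 - (1 - p)^n model.

    p_detect is the assumed chance that a single participant hits a given problem.
    """
    if n_participants < 0:
        raise ValueError("n_participants must be non-negative")
    if not 0.0 <= p_detect <= 1.0:
        raise ValueError("p_detect must be between 0 and 1")
    return 1.0 - (1.0 - p_detect) ** n_participants


for n in (1, 3, 5, 8, 15):
    share = share_of_problems_found(n)
    print(f"{n:>2} participants -> about {share:.0%} of problems (model estimate)")
```

The curve flattens quickly, which is why several small rounds of testing between design changes tend to be a better use of budget than one large round.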

Where to find the right test participants

If you already have an existing user base, recruiting participants is easier. But if you need external testers, consider these sources:

Beta testers & early adopters: Engage loyal customers who actively provide feedback.

Recruitment platforms: Services like UserTesting, Respondent.io, or Maze can connect you with test participants from your target audience.

Social media & industry communities: LinkedIn, Reddit, and specialized Slack groups can be useful for finding niche testers.

Customer feedback loops: Reach out to users who have previously submitted support tickets or feature requests—they often provide valuable insights.

Avoid these common participant mistakes

Testing with the wrong demographic: A usability test for a SaaS analytics tool won’t be useful if participants are casual web users instead of product managers or data analysts.

Ignoring device and browser testing: Testing only on desktop won’t reveal mobile-specific UX problems, like poor tap targets or hidden navigation menus.

Only testing internally: Internal team members are too familiar with the product and won’t provide objective feedback.

Step 3: Choose the right testing environment

The usability testing environment you choose has a direct impact on the quality and reliability of your findings. Selecting the wrong approach could lead to biased insights, incomplete data, or results that don’t translate into real-world improvements.

Your decision should be based on:

Your budget and available resources – Some testing methods require facilitators, dedicated software, and scheduling, while others can be automated.

Your research objectives – If you need deep qualitative insights, a structured environment is necessary. If you’re gathering large-scale usability data, a lightweight, remote approach might be more effective.

How closely you want to mimic real-world usage – Testing under natural conditions ensures that user behavior is authentic and unbiased.

Now, let’s explore moderated vs. unmoderated testing, and whether remote or in-person usability testing is right for you.

Choosing the right testing environment for your needs

| Testing Type | Best For | Challenges |
|---|---|---|
| Moderated | Deep qualitative insights, uncovering hidden usability issues | Time-consuming, may influence user behavior |
| Unmoderated | Large-scale feedback, unbiased interactions | Lacks real-time questioning, possible misinterpretation of tasks |
| Remote | Natural user behavior, cost-effective, scalable | Less control over variables, limited troubleshooting |
| In-Person | High-control, deeper behavioral insights | Expensive, not always representative of real-world use |

Choosing the right testing environment depends on your usability goals, budget, and timeline. Once you’ve decided on the best approach, the next step is designing realistic usability test tasks that mirror actual user behavior.

Moderated vs. unmoderated usability testing

The choice between moderated and unmoderated usability testing depends on the depth of insights needed and available resources. Moderated testing allows real-time probing and deeper qualitative feedback, while unmoderated testing is faster, more scalable, and cost-effective. Below is a quick comparison:

| Aspect | Moderated Usability Testing | Unmoderated Usability Testing |
|---|---|---|
| Definition | Facilitator guides and observes participants. | Participants complete tasks independently. |
| Best for | Complex issues, qualitative feedback, early-stage testing. | Large-scale testing, unbiased behavior, quick feedback. |
| Challenges | Time-consuming, costly, observer effect. | No follow-ups, risk of misinterpreted tasks. |

Remote vs. in-person usability testing

Choosing between remote and in-person usability testing depends on your goals, budget, and need for control. Remote testing provides natural user interactions, scalability, and cost savings, while in-person testing offers deeper insights through body language and real-time support. Below is a quick comparison:

| Aspect | Remote Usability Testing | In-Person Usability Testing |
|---|---|---|
| Definition | Users test in their own environment. | Testing occurs in a controlled setting. |
| Best for | Real-world usage, broad reach, cost-effectiveness. | High-risk designs, body language analysis, technical support. |
| Challenges | Less control, no real-time troubleshooting. | Time-consuming, costly, less natural behavior. |

Step 4: Create realistic tasks and scenarios

A usability test is only valuable if it replicates how users actually interact with your web app. Instead of simply asking users to explore the site, create structured tasks that reflect common goals, workflows, and pain points.

But first, why does task design matter? Let's dive in:

Unstructured tasks lead to vague insights. Asking users to "browse the homepage" won’t tell you if they can complete key actions efficiently.

Real-world scenarios uncover genuine usability issues. If an eCommerce shopper struggles to find a product or apply a discount code, it signals friction points that impact revenue.

Users behave differently based on context. Testing an admin panel for a SaaS platform requires different task structures than testing a checkout flow for an online store.

How to design effective usability test tasks

1. Align tasks with user goals: Identify common user objectives based on real-world use cases. Your test tasks should match actual workflows to capture usability friction.

SaaS web app: Sign up for an account, locate the dashboard, and invite a team member.

E-commerce platform: Find a pair of sneakers under $100, add them to your cart, and check out using PayPal.

Subscription-based product: Upgrade from a free plan to a premium subscription and review your new billing details.

2. Keep tasks clear and unbiased: Avoid vague instructions. Instead of "See how the checkout process works," use "Purchase a laptop and apply a discount code before completing checkout." It's also important to use neutral words. For example, instead of "Find the easy-to-use settings menu," use "Change your notification preferences from the settings page."

3. Incorporate expected user challenges: Anticipate potential usability pain points in your test scenarios. If a SaaS product has a complex onboarding flow, create a task that evaluates if users can complete onboarding without external help.
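If you write a lot of task scripts, a quick automated pass can catch leading wording before a session starts. The sketch below is a rough aid and no substitute for a careful read: the `LEADING_WORDS` list and the `find_leading_words` helper are hypothetical, and you would want to adapt the word list to your own product vocabulary.

```python
import re

# Hypothetical starter list of words that hint at the "right" answer or judge the UI.
LEADING_WORDS = {"easy", "easily", "simple", "simply", "quick", "quickly", "intuitive", "obvious"}


def find_leading_words(task_text: str) -> list[str]:
    """Return the leading words found in a task instruction, sorted alphabetically."""
    words = re.findall(r"[a-z]+", task_text.lower())
    return sorted(set(words) & LEADING_WORDS)


tasks = [
    "Find the easy-to-use settings menu",
    "Change your notification preferences from the settings page.",
]

for task in tasks:
    flagged = find_leading_words(task)
    status = f"revise, contains {flagged}" if flagged else "ok"
    print(f"{task!r}: {status}")
```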

Step 5: Establish key usability metrics

Usability testing is only valuable if it leads to clear, actionable insights. While direct user feedback is helpful, it’s often subjective. To truly understand where users struggle and why, you need to track quantitative usability metrics alongside qualitative observations.

Measuring the right usability metrics allows you to:

Identify usability bottlenecks that prevent users from completing key actions.

Track improvements over time by comparing test results before and after design updates.

Validate user feedback with real behavior data, rather than relying on assumptions.

The most important usability metrics fall into five key categories: task success rate, time on task, error rate, navigation patterns, and drop-off points.

Task success rate: are users completing key actions?

A high task success rate means users can easily complete the intended actions without confusion. A low success rate signals usability issues that may be blocking conversions, sign-ups, or purchases.

For example, if 80% of users fail to complete checkout, there may be issues with form clarity, payment options, or error handling. Tracking task success over multiple tests helps identify improvements or worsening usability problems.
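With only a handful of participants, a raw success percentage can look more precise than it is. A minimal sketch of one common remedy, the Wilson score interval, is shown below. The function name is hypothetical and `z = 1.96` assumes an approximate 95% confidence level.

```python
import math


def success_rate_with_interval(successes: int, attempts: int, z: float = 1.96) -> tuple[float, float, float]:
    """Return (observed rate, lower bound, upper bound) using the Wilson score interval."""
    if attempts <= 0 or not 0 <= successes <= attempts:
        raise ValueError("need attempts > 0 and 0 <= successes <= attempts")
    p = successes / attempts
    denom = 1 + z**2 / attempts
    center = (p + z**2 / (2 * attempts)) / denom
    half_width = z * math.sqrt(p * (1 - p) / attempts + z**2 / (4 * attempts**2)) / denom
    return p, max(0.0, center - half_width), min(1.0, center + half_width)


# Example: 2 of 10 participants completed checkout (80% failed).
rate, low, high = success_rate_with_interval(2, 10)
print(f"Observed success rate: {rate:.0%}, plausible range: {low:.0%} to {high:.0%}")
```

A wide range is a reminder to treat the number as a signal worth investigating, not as a precise benchmark.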

Time on task: how efficient is the user experience?

Speed matters, but context is key. If a user completes a task too quickly, they may be skipping important steps. If they take too long, they might be struggling with confusing navigation or inefficient workflows.

For instance, if new users take twice as long as experienced users to complete a task, onboarding improvements might be necessary. Comparing time-on-task metrics across different test groups helps pinpoint where efficiency can be improved.
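The comparison between groups can be sketched in a few lines. The example below uses the median over the mean because one participant who gets badly stuck can distort an average; the timings are made up for illustration and `median_time_ratio` is a hypothetical helper.

```python
from statistics import median


def median_time_ratio(new_user_seconds: list[float], experienced_seconds: list[float]) -> float:
    """How many times longer the typical new user takes than the typical experienced user."""
    return median(new_user_seconds) / median(experienced_seconds)


# Made-up task times in seconds.
new_users = [84, 120, 95, 210, 101]
experienced = [48, 52, 45, 60, 50]

ratio = median_time_ratio(new_users, experienced)
print(f"New users take about {ratio:.1f}x as long as experienced users")
```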

Error rate: how often do users make mistakes?

Frequent errors can indicate usability friction. Whether it’s misclicking the wrong button, entering incorrect form data, or struggling with unclear instructions, tracking error rates helps identify where users are experiencing avoidable frustration.

If users repeatedly input incorrect passwords because there’s no "Show Password" option, reducing errors might be as simple as adding visibility toggles. Monitoring these patterns helps refine interface clarity and usability.
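To see where errors cluster, it helps to normalize raw error counts by how many attempts each step received. The sketch below assumes you have logged errors as `(step, error_type)` pairs; that log format and the `error_rate_by_step` helper are hypothetical.

```python
from collections import Counter


def error_rate_by_step(error_events: list[tuple[str, str]], attempts_per_step: dict[str, int]) -> dict[str, float]:
    """Errors per attempt for each step; steps with zero attempts are skipped."""
    counts = Counter(step for step, _error_type in error_events)
    return {
        step: counts[step] / attempts
        for step, attempts in attempts_per_step.items()
        if attempts > 0
    }


# Made-up observations from a sign-up test.
events = [
    ("password", "mismatch"),
    ("password", "too_short"),
    ("password", "mismatch"),
    ("email", "invalid_format"),
]
attempts = {"email": 10, "password": 10, "payment": 8}

rates = error_rate_by_step(events, attempts)
for step, step_rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{step}: {step_rate:.0%} errors per attempt")
```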

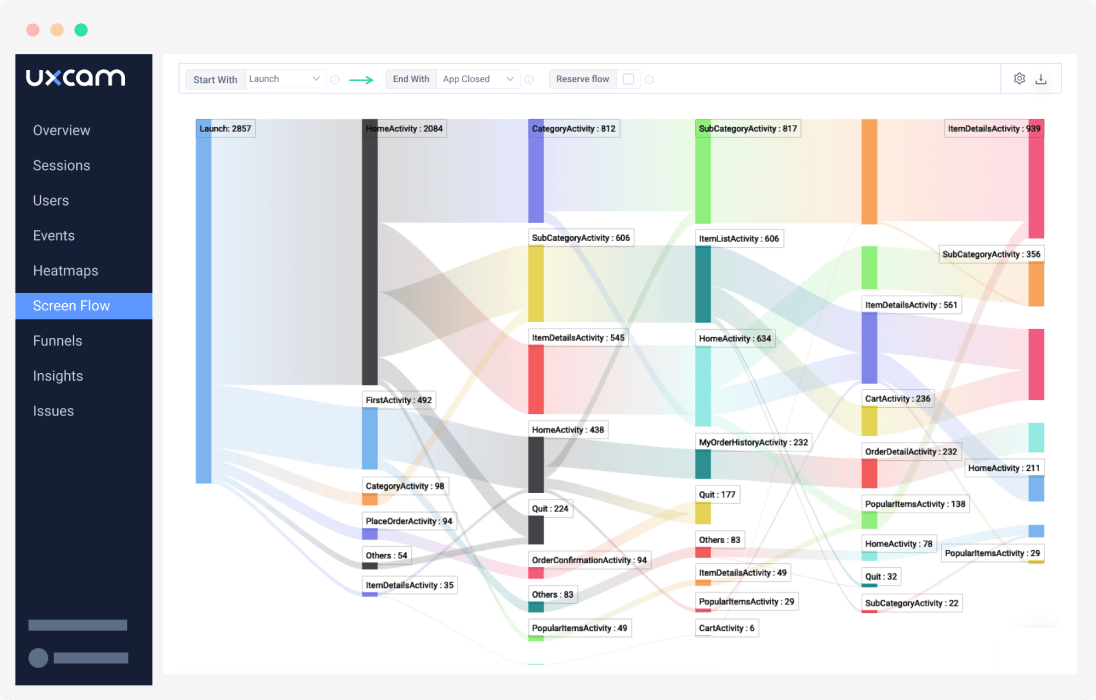

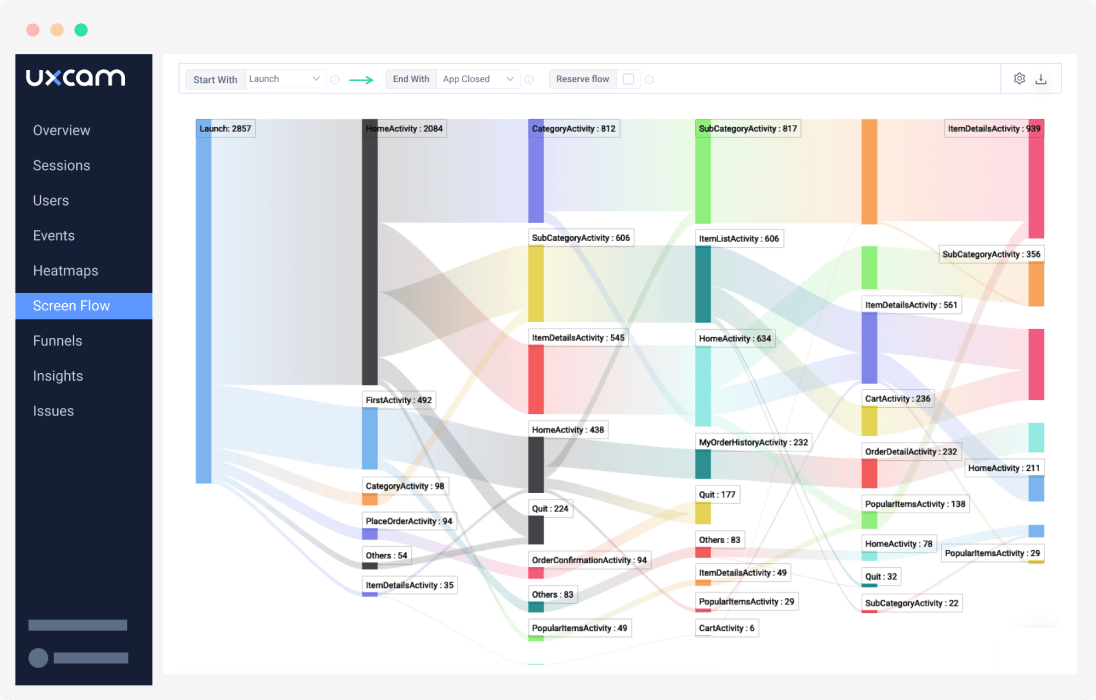

Navigation patterns: are users moving through the site as intended?

Even if users eventually reach their goal, their navigation path can reveal hidden usability issues. If they hesitate, backtrack, or take an inefficient route, it may signal poor information architecture or unclear wayfinding.

For example, if users frequently return to the homepage while searching for settings, they may not recognize the settings icon or find the menu intuitive. Heatmaps, session replays, and click tracking can help uncover unexpected navigation struggles.
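If you can export the sequence of pages a participant visited, a simple count of repeat visits gives a rough backtracking signal. This is a sketch under that assumption: the path format and the `count_backtracks` helper are hypothetical, and a reload of the same page also counts as a repeat visit here.

```python
def count_backtracks(path: list[str]) -> int:
    """Count visits to a page that was already seen earlier in the same session."""
    seen: set[str] = set()
    backtracks = 0
    for page in path:
        if page in seen:
            backtracks += 1
        seen.add(page)
    return backtracks


# Made-up session: the participant keeps returning home while looking for settings.
session_path = ["/home", "/profile", "/home", "/billing", "/home", "/settings"]
print(f"Repeat visits before reaching settings: {count_backtracks(session_path)}")
```

A high count for one task across several participants suggests the route to the goal is not obvious, which is worth confirming in the session recordings.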

Drop-off points: where are users abandoning the experience?

Tracking where users exit a process before completion is critical for improving conversion rates. If users abandon sign-up forms at the password creation step, it could mean requirements are too complex. If checkout drop-offs spike at the payment page, unexpected costs or unclear options might be to blame.

Understanding why users leave is key to fixing high-impact usability problems that affect retention and business outcomes.
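Finding the worst drop-off is mostly arithmetic once you know how many users reached each step. The sketch below assumes an ordered list of `(step, users)` counts; the numbers are invented and `funnel_drop_off` is a hypothetical helper.

```python
def funnel_drop_off(step_counts: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """For each pair of consecutive steps, return the share of users lost between them."""
    report = []
    for (prev_name, prev_users), (name, users) in zip(step_counts, step_counts[1:]):
        lost_share = 0.0 if prev_users == 0 else 1 - users / prev_users
        report.append((f"{prev_name} -> {name}", lost_share))
    return report


# Made-up checkout funnel.
funnel = [("cart", 200), ("shipping", 150), ("payment", 120), ("confirmation", 60)]

report = funnel_drop_off(funnel)
for transition, lost_share in report:
    print(f"{transition}: {lost_share:.0%} drop-off")

worst = max(report, key=lambda row: row[1])
print(f"Largest drop-off: {worst[0]}")
```

The transition with the largest loss is where follow-up questions and session reviews are most likely to pay off.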

Relying solely on user interviews can lead to subjective feedback and false positives. Combining usability metrics with real-world observations ensures that improvements are data-driven rather than based on assumptions. By tracking usability metrics consistently, you can measure progress, validate design changes, and create a seamless user experience.

Step 6: Guide participants without bias

The way you interact with participants during a usability test can directly influence their behavior. If users sense they are being evaluated or guided toward a particular response, the results may be unreliable.

To capture authentic user interactions, you must create a neutral testing environment where participants feel comfortable exploring the interface on their own terms.

Set expectations without leading the user

At the start of the session, clearly explain:

The goal is to evaluate the usability of the website, not the user’s performance.

There are no right or wrong answers, and struggles are just as valuable as successes.

Participants should think out loud so you can understand their thought process.

Avoid phrasing that subtly influences user behavior. For example, instead of asking:

"Did you find the checkout button easy to locate?" → Ask, "How would you complete a purchase?"

This small difference prevents leading the user toward a specific response and ensures they navigate naturally.

Avoid unintentional cues

Even non-verbal gestures can impact a user’s decision-making. If a facilitator nods approvingly when a user selects a button, the participant may assume they made the correct choice, even if they were confused.

To maintain neutrality:

Keep facial expressions and reactions neutral, even if the user struggles.

Resist the urge to correct or guide users during the test.

Ask open-ended follow-up questions instead of confirming their actions.

For example, instead of saying "Yes, that’s correct," ask:

"What made you decide to click there?"

"What did you expect to happen?"

These subtle adjustments help ensure genuine usability issues are uncovered, rather than allowing users to rely on facilitator feedback.

Step 7: Observe and record user behavior

Once the usability test begins, the most valuable insights often come from observing what users do, not just what they say. Many usability problems go unnoticed because users don’t always articulate their struggles.

Carefully tracking user interactions helps identify hidden usability friction points that surveys or direct feedback might miss.

Watch for hesitation and uncertainty

If a user pauses frequently, re-reads text, or hovers their cursor indecisively, they may be unsure about the next step. This can signal:

Unclear instructions that need better copywriting.

Low visual hierarchy making it hard to distinguish primary actions.

Ambiguous UI elements that require redesigning.

Tracking these moments helps uncover where cognitive friction is slowing users down.

Look for backtracking and misclicks

Users who repeatedly click the wrong button, navigate back and forth, or abandon a process are likely experiencing confusion. This might indicate:

A misleading CTA label (e.g., users clicking "Continue" when they expected "Submit").

A misplaced action button that isn’t visually prominent.

A missing confirmation step that leaves users unsure if an action was successful.

These behavioral cues highlight interface pain points that might not surface in direct feedback.

Track signs of frustration

Non-verbal cues—such as sighing, fidgeting, or rapidly clicking without effect—often indicate frustration. If multiple users display similar behaviors at the same stage of the test, it’s a strong signal that something in the interface is causing unnecessary friction.

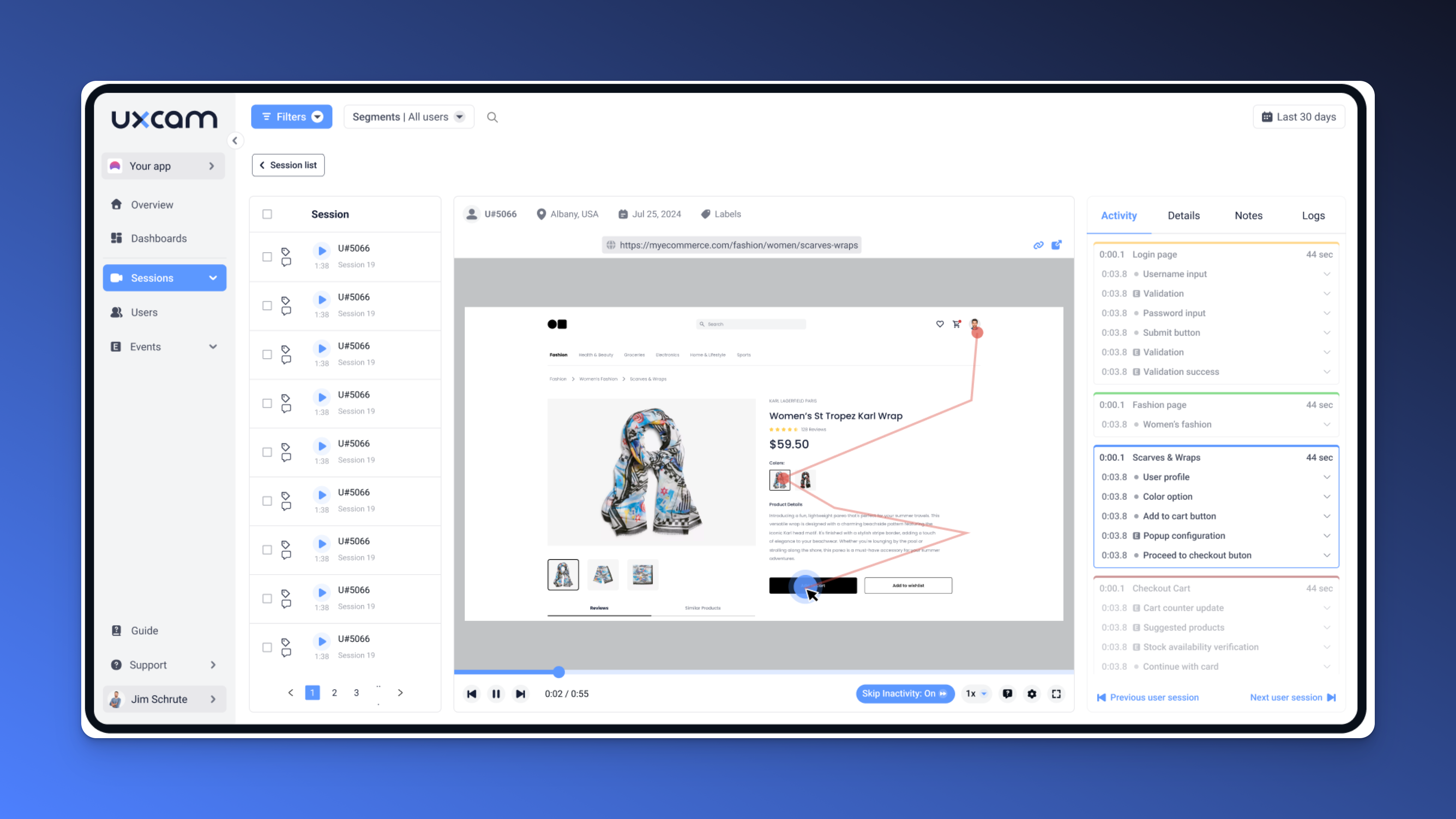

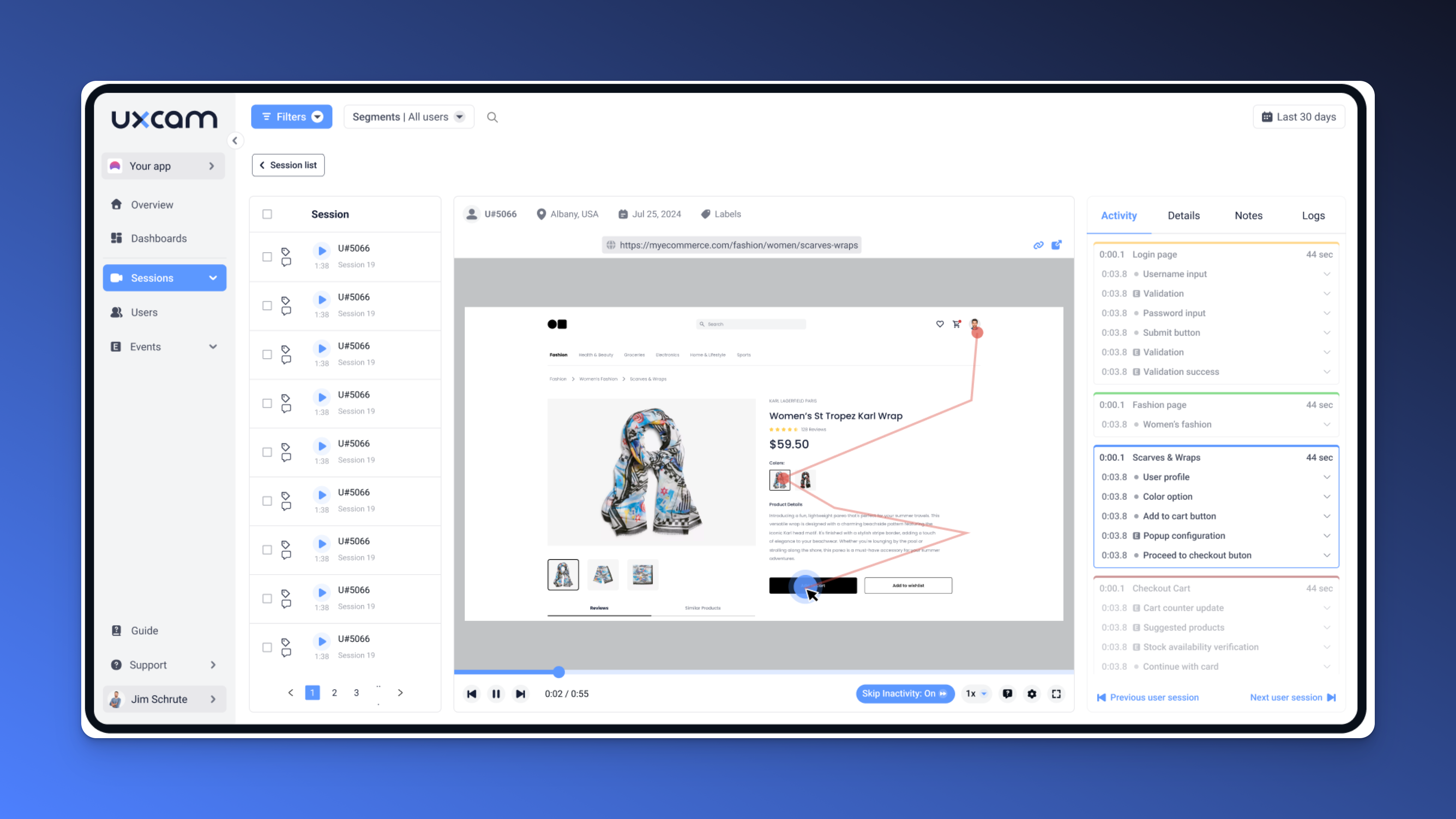

Using session recordings and heatmaps allows you to analyze patterns across multiple users, helping prioritize which frustration points need immediate fixes.
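Rapid repeated clicking on the same element is one frustration signal you can detect from a click log. The sketch below is a rough heuristic under stated assumptions: clicks arrive as `(timestamp_seconds, element_id)` pairs sorted by time, and the thresholds of three clicks within one second are arbitrary starting points, not an industry standard. The `find_rage_clicks` helper is a hypothetical name.

```python
def find_rage_clicks(clicks: list[tuple[float, str]], min_clicks: int = 3, window_seconds: float = 1.0) -> set[str]:
    """Return element ids that received at least min_clicks clicks within any window_seconds span.

    Assumes clicks are sorted by timestamp.
    """
    times_by_element: dict[str, list[float]] = {}
    for timestamp, element in clicks:
        times_by_element.setdefault(element, []).append(timestamp)

    flagged = set()
    for element, times in times_by_element.items():
        # Slide over every run of min_clicks consecutive clicks on this element.
        for i in range(len(times) - min_clicks + 1):
            if times[i + min_clicks - 1] - times[i] <= window_seconds:
                flagged.add(element)
                break
    return flagged


# Made-up click log from one session.
click_log = [
    (0.0, "nav"),
    (5.1, "submit"),
    (5.4, "submit"),
    (5.8, "submit"),
    (9.0, "help"),
    (12.0, "help"),
]
print(f"Possible rage clicks on: {find_rage_clicks(click_log)}")
```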

Observation is key

People often don’t realize when they are struggling with a user interface. If you only rely on user-reported feedback, you may miss critical usability barriers.

Careful observation shows you:

Where users hesitate, backtrack, or misclick, which reveals navigation pain points.

Which interactions cause frustration, which points to UI elements that need redesigning.

How users react non-verbally, which uncovers usability issues they may not even be aware of.

Pairing direct observations with usability metrics ensures that design decisions are based on real user behavior, not just opinions.

Step 8: Collect post-test feedback

Completing a usability test is just the first step; understanding why users struggled is what turns observations into actionable improvements. Once a participant finishes the assigned tasks, it’s important to gather qualitative feedback to uncover what worked, what didn’t, and what felt confusing.

Numbers and analytics can tell you where users encountered friction, but they don’t always explain why. This is where post-test discussions come in. By asking the right questions, you gain insights into users' thought processes, expectations, and frustrations.

Instead of overwhelming participants with a long questionnaire, focus on a few open-ended but targeted questions. Asking “What did you find most frustrating or confusing?” often leads to surprising revelations—users may mention issues that weren’t immediately obvious during observation. Another useful question is “Was there anything unclear or unexpected?” which helps pinpoint areas where user expectations didn’t match reality.

It’s also helpful to ask about their overall experience—whether it felt smooth or frustrating, efficient or cumbersome. This gives you a broader understanding of how intuitive the interface feels. If users hesitate before answering, that itself is a clue that something in the experience wasn’t quite right.

Post-test feedback bridges the gap between raw usability data and user perception, giving context to the numbers. If multiple users mention the same frustration, that’s a strong indication that the issue is not an isolated case—it’s something that needs to be fixed.

Step 9: Analyze findings and prioritize fixes

Once usability testing is complete, the real work begins. You now have a mix of quantitative data (success rates, task times, drop-offs) and qualitative insights (user frustrations, confusion points). The challenge is turning this information into a clear action plan.

Start by looking for recurring usability issues. If several users struggled with the same task, that’s a high-priority problem. For example, if multiple participants got stuck on the checkout page, it’s a sign that something about the process—perhaps unclear payment options or an unexpected extra step—is causing friction.

Next, compare usability metrics with user feedback. If heatmaps and session recordings show a high drop-off at a particular step, does that align with users reporting that they “felt lost” or “weren’t sure what to do next”? When feedback and data reinforce each other, you have strong evidence that a fix is needed.

Not all usability problems are equal, so prioritization is key. A structured approach can help:

High-impact, low-effort fixes (like renaming a confusing button or improving form validation) should be addressed first.

More complex issues (like redesigning an onboarding flow) might require further testing and iteration.

A clear prioritization strategy ensures that fixes are not just reactive but strategically focused on improving the most critical parts of the user experience.
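One simple way to turn "high-impact, low-effort first" into an ordering is to score each issue by impact divided by effort. The scoring formula, the 1 to 4 severity scale, and the 1 to 5 effort scale below are all assumptions made for illustration; many teams use their own rubric.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    participants_affected: int
    severity: int  # hypothetical scale: 1 (cosmetic) to 4 (blocks the task)
    effort: int    # hypothetical scale: 1 (trivial) to 5 (major redesign)


def priority_score(issue: Issue) -> float:
    """Higher scores mean more impact per unit of effort."""
    return issue.participants_affected * issue.severity / issue.effort


# Made-up findings from a test round.
issues = [
    Issue("Confusing button label", 6, 3, 1),
    Issue("Onboarding flow too long", 5, 4, 5),
    Issue("Weak form validation messages", 4, 3, 2),
]

ranked = sorted(issues, key=priority_score, reverse=True)
for issue in ranked:
    print(f"{priority_score(issue):5.1f}  {issue.name}")
```

A score like this is a conversation starter for the team, not a verdict: a severe blocker with a low score because of high effort may still need to be scheduled.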

Step 10: Implement changes and retest

Fixing usability issues isn’t the final step—it’s just another phase in the continuous improvement cycle. A usability fix isn’t successful unless it’s been validated through further testing. Just because something makes sense to the design team doesn’t mean it will translate into a better user experience.

The best way to measure post-fix success is to compare before-and-after usability metrics. Has the task success rate improved? Are users completing key actions faster? Have drop-offs decreased? These are clear indicators that the usability improvement had the intended effect.
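A before-and-after comparison can be as simple as subtracting one round's metrics from the next. The metric names and values below are invented for illustration, and `compare_rounds` is a hypothetical helper. With small samples, treat small differences as possible noise.

```python
def compare_rounds(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Change in each metric present in both rounds (after minus before)."""
    return {metric: after[metric] - before[metric] for metric in before if metric in after}


# Made-up metrics from two test rounds.
before = {"task_success_rate": 0.60, "median_seconds": 140.0, "drop_off_rate": 0.35}
after = {"task_success_rate": 0.85, "median_seconds": 95.0, "drop_off_rate": 0.20}

changes = compare_rounds(before, after)
for metric, delta in changes.items():
    print(f"{metric}: {delta:+.2f}")
```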

But numbers alone aren’t enough. Running follow-up usability tests with new participants ensures that the changes work not just for those who originally encountered issues, but for a broader range of users. Observing real interactions with the updated interface helps confirm whether the changes truly resolved the problems—or if new friction points emerged.

Beyond controlled usability tests, tracking long-term user behavior in a live environment is equally important. Product analytics, session recordings, and support inquiries can provide ongoing insights into whether the improvements are holding up in real-world usage. If users still submit the same support tickets about a feature even after an update, it’s a sign that something is still unclear.

Conclusion and next steps

Effective website usability testing isn’t just about running a test—it’s about systematically identifying, analyzing, and improving the user experience. By carefully planning each step, from defining objectives to gathering feedback and iterating on changes, you ensure that usability insights translate into real-world improvements.

Usability testing is not a one-time effort but a continuous practice. As user expectations evolve and new features are introduced, ongoing testing helps keep your web app intuitive, efficient, and aligned with user needs. Combining direct user feedback with product analytics allows you to uncover hidden friction points, measure impact, and refine the experience for long-term growth and engagement.

To take usability testing even further, UXCam’s session replays and funnel analytics provide deeper insights into user behavior. Try UXCam for free today and start making data-driven usability improvements that enhance your web app’s performance and user satisfaction.

You might also be interested in these:

What is Web Analytics? Definition, Metrics & Best Practices

Website Visitor Tracking - A Comprehensive Guide

Top 10 Digital Analytics Tools You Need to Know

Ultimate Website Optimization Guide: Must-Know Tactics

Website Analysis 101: How to Analyze for Peak Performance

FREE Usability Testing Templates and How To Use Them

Remote Usability Testing Tools

AUTHOR

Tope Longe

Product Analytics Expert

Ardent technophile exploring the world of mobile app product management at UXCam.
